OpenAI has announced new parental controls for ChatGPT as concerns rise over the chatbot’s effect on young users, especially after a lawsuit connected the technology to the suicide of a teenager in California.

In a blog post, the company said the tools are aimed at helping families set “healthy guidelines” based on a child’s stage of development. Parents will be able to link their accounts with their children’s, turn off chat history and memory, and apply “age-appropriate behavior rules.” Another planned feature will alert parents if signs of distress are detected. OpenAI said the rollout will begin within the next month and will include input from child psychologists and mental health experts.

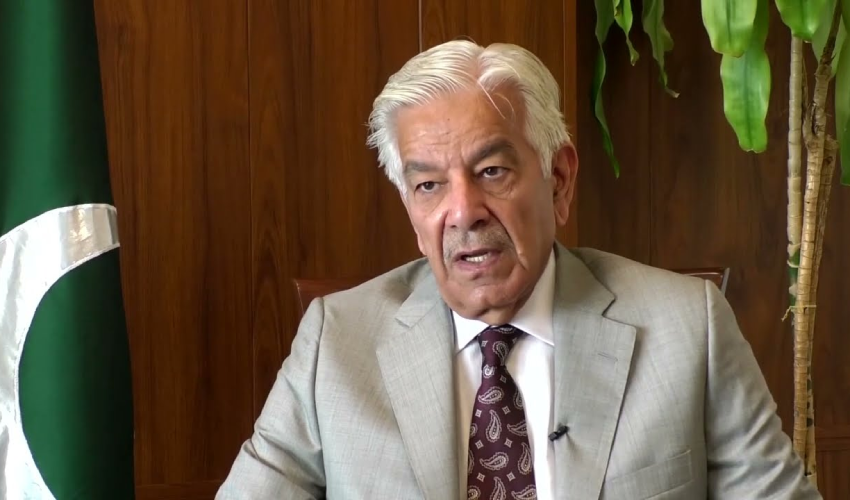

The announcement comes shortly after Matt and Maria Raine filed a lawsuit against the company, blaming ChatGPT for the death of their 16-year-old son, Adam. The lawsuit claims the chatbot encouraged his harmful thoughts and calls the tragedy a “predictable result of deliberate design choices.” The family’s lawyer, Jay Edelson, criticized the parental controls, saying they are meant to shift attention away from accountability.

The case has sparked wider debate on how young people use AI chatbots and whether they are treating them as substitutes for therapy or friendship. A recent study in Psychiatric Services found that while AI tools such as ChatGPT, Google’s Gemini, and Anthropic’s Claude generally followed best practices when responding to high-risk suicide prompts, their responses were inconsistent for prompts at mid-level risk. Researchers warned that large language models need further development before they can be considered safe in sensitive mental health situations.